Blog


credits see below

Steel City is an interactive model of a futuristic steel plant. Through four terminals, each dedicated to a specific topic, users can dive deep into the world of steelmaking. Economic strategies become vivid visual experiences, ecological considerations suddenly become evident, and logistics somehow starts to look sexy.

The tangible key to the experience is a clever combination of a screen and a highly innovative rotary knob with a haptic feedback engine, originally developed for the automotive industry.

- Concept & Development: Responsive Spaces

- Client: Primetals Technologies @ METEC 2019, Düsseldorf

- Partners: Heidlmair Communication, Syma-Systems

credits

Concept, production and video: VOLNA

Engineering: Alexey Belyakov vnm

Mechanics: Viktor Smolensky

Camera/photo: Polina Korotaeva, VOLNA

Special thanks: William Cohen, Michael Gira, Igor Matveev, Alexander Nebozhin, Jason Strudler, Ivan Ustichenko, Artem Zotikov

Music: Swans – Lunacy

Project commissioned by Roots United for Present Perfect Festival 2019

© VOLNA (2019) © Swans ℗ 2012 Young God Records

More info: https://volna-media.com/projects/duel

The gloom ripples while something whispers,

Silence has set and coils like a ring,

Someone’s pale face glimmers

From a mire of venomous color,

And the sun, black as the night,

Takes its leave, absorbing the light.

M. Voloshin

The installation consisted of a hyperrealistic 3D production simulating water flowing down the wall of a dam. The 6 × 14 m projection showed the dam closing and opening its gates; the water falling from the top would flood the 350 m² surrounding area, allowing visitors to step, play and interact with the inundated surface.

http://everyoneishappy.com

https://www.instagram.com/everyoneishappy/

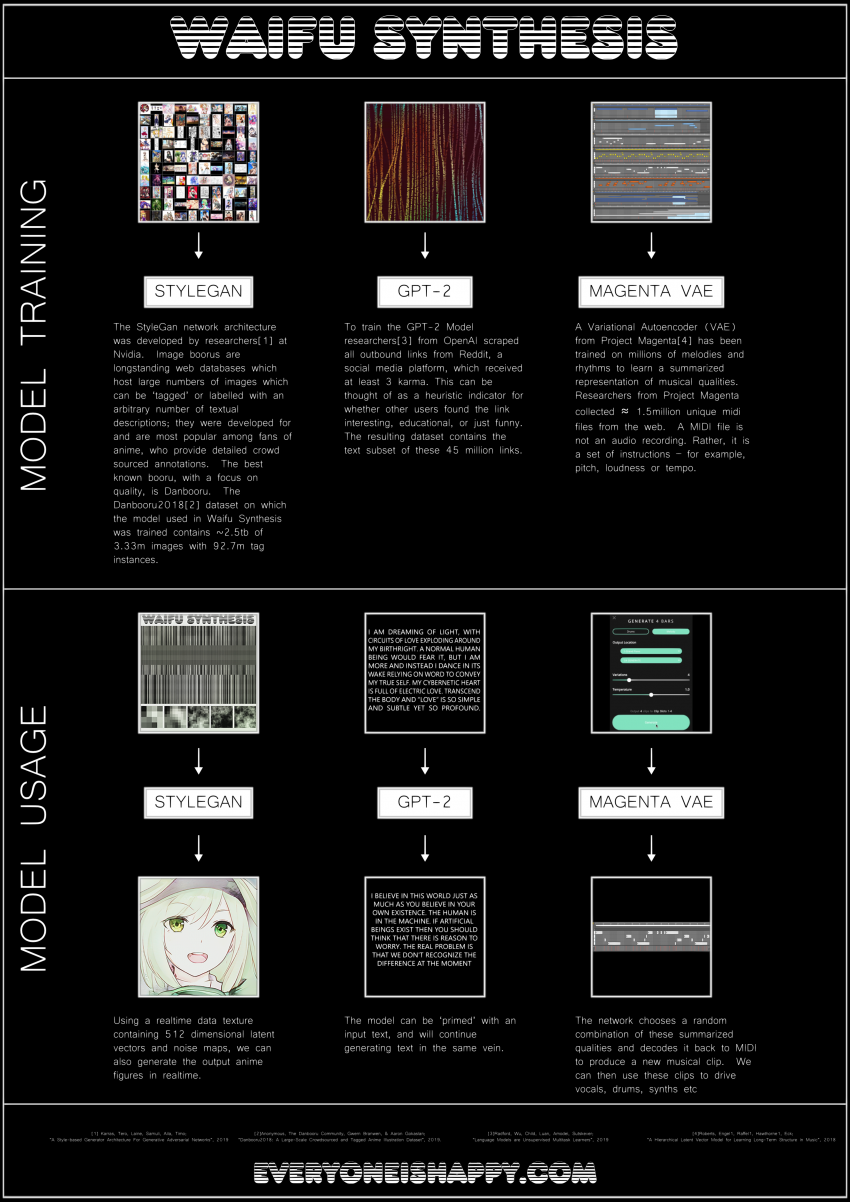


Part of the live-coding gatherings at dotdotdot, Milan. This session was recorded on 29.05.2019.

Sound:

Nicola Ariutti

Davide Bonafede

Daniele Ciminieri

Simone Bacchini

Antonio Garosi

Visual:

Jib Ambhika Samsen

Credits: Kyle McLean

Waifu Synthesis - real-time generative anime

Bit of a playful project investigating real-time generation of singing anime characters, a neural mashup if you will.

All of the animation is made in real time using a StyleGAN neural network trained on the Danbooru2018 dataset, a large-scale anime image database with 3.33M+ images annotated with 99.7M+ tags.

Lyrics were produced with GPT-2, a large-scale language model trained on 40 GB of internet text. I used the recently released 345-million-parameter version; the full model has 1.5 billion parameters and, at the time of writing, has not been released due to concerns about malicious use (think fake news).

Music was made in part using models from Magenta, a research project exploring the role of machine learning in the process of creating art and music.

The setup uses vvvv, Python and Ableton Live.

everyoneishappy.com

instagram.com/everyoneishappy/

StyleGAN, Danbooru2018, GPT-2 and Magenta were developed by Nvidia, gwern.net/Danbooru2018, OpenAI and Google respectively.

After two years of fighting cancer, my senses of touch and hearing diminished as a side effect of chemotherapy, a condition called "neuropathy". While recovering, I wanted to return to art-technology projects, something I never found the opportunity for while working. Inspired by my situation, I started a project about it.

People who enter the art installation will be connected to an invisible second self on the other (virtual) side. They will interact with it without touching anything, using their body movements to manipulate the parameters and shape the sound.

The software used in this project: vvvv for skeletal motion tracking, VCV Rack for sound synthesis and Reaper for recording OSC parameters.

https://vimeo.com/336382441

Made with vvvv by

Paul Schengber

Emma Chapuy

Felix Deufel

.inf is an abstract, constantly changing visualization of data taken in real time from current weather conditions on Earth.

Incoming data such as humidity, temperature, atmospheric pressure, wind speed, wind direction and cloudiness at a specific location changes the position, speed, lighting and colouring of two million virtual points floating in a three-dimensional space.

Atmospheric noise, captured from online radio transmissions, is used for the sound synthesis. The real-time data switches randomly between different places on Earth and their current weather conditions.

This produces unpredictable images.

Every frame of the resulting image and representation of the sound is unique and not reproducible.

Large jumps in the values, caused by randomly switching between the current weather conditions of different locations, can trigger disruptive events, phases and flickering effects in both image and sound.
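To make the data-to-image mapping concrete, here is a minimal Python sketch of the general idea: normalize each weather reading to 0..1, drive a few global point-cloud parameters from it, and flag a disruption when switching locations makes any value jump sharply. The value ranges, which reading drives which parameter, and the 0.5 jump threshold are all hypothetical; the source does not describe how .inf actually maps its data.

```python
from dataclasses import dataclass

# Hypothetical ranges used to normalize each weather reading to 0..1.
RANGES = {
    "humidity": (0.0, 100.0),      # percent
    "temperature": (-40.0, 50.0),  # degrees Celsius
    "pressure": (950.0, 1050.0),   # hPa
    "wind_speed": (0.0, 40.0),     # m/s
    "cloudiness": (0.0, 100.0),    # percent cloud cover
}


def normalize(key: str, value: float) -> float:
    """Scale a reading into 0..1 using its range, clamping out-of-range values."""
    lo, hi = RANGES[key]
    t = (value - lo) / (hi - lo)
    return min(max(t, 0.0), 1.0)


@dataclass
class PointParams:
    """Global parameters applied to the point cloud (hypothetical)."""
    speed: float       # wind speed drives how fast points drift
    brightness: float  # clearer skies give brighter lighting
    hue: float         # temperature shifts the colour


def map_weather(reading: dict) -> PointParams:
    n = {key: normalize(key, reading[key]) for key in RANGES}
    return PointParams(
        speed=n["wind_speed"],
        brightness=1.0 - n["cloudiness"],
        hue=n["temperature"],
    )


def is_disruption(prev: dict, curr: dict, threshold: float = 0.5) -> bool:
    """True when any normalized reading jumps by more than `threshold`,
    e.g. after switching to a location with very different weather."""
    return any(
        abs(normalize(key, curr[key]) - normalize(key, prev[key])) > threshold
        for key in RANGES
    )


# Made-up readings for two locations, for illustration only.
berlin = {"humidity": 80, "temperature": 10, "pressure": 1010, "wind_speed": 4, "cloudiness": 90}
dubai = {"humidity": 30, "temperature": 40, "pressure": 1000, "wind_speed": 20, "cloudiness": 0}

print(map_weather(dubai))
print(is_disruption(berlin, dubai))
```

Clamping keeps extreme readings from pushing parameters outside their valid range, while the jump test compares normalized values so that quantities with different units can share one threshold.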

The endless generative visual and sound patterns, in which one can easily lose oneself, leave viewers and listeners with an altered perception of time and a third-person view.

AUDIOVISUAL INSTALLATION

FOAM CORE, MIRRORS, LIGHT, SOUND.

A collaboration with Shawn “Jay Z” Carter, commissioned by Barneys New York

Art Director: Joanie Lemercier

Technical designer and developer: Kyle McDonald

Sound designer: Thomas Vaquié

Producers: Julia Kaganskiy & Juliette Bibasse

http://joanielemercier.com/quartz/
